Researchers at the University of Twente have developed a technique that could lower the barriers to practical, cost-effective quantum computing. By improving the quality of photons, the particles of light, they have taken a step toward a more efficient form of quantum computing that uses light. The work was published in the journal Physical Review Applied.

The race to realize the potential of quantum computing is intensifying, with substantial investment from industry leaders and governments worldwide. The main obstacles are the quantity and the quality of qubits, the fundamental units of quantum computation. The team’s method tackles these by trading quantity for quality: it gives up some photons to obtain better ones, which delivers more usable computing power and brings closer applications that range from new medications to secure communications.

Key Takeaways

- Advancements in photon quality mark progress in quantum computing efficiency.

- The new research exchanges qubit quantity for quality, enhancing computing power.

- The technology is crucial for the advent of future photonic quantum computers.

Enhanced Photons for Quantum Computing Precision

Photonic quantum computing depends on photons in a pure quantum state. The Twente researchers have advanced an error correction technique that acts early in the computation and improves the quality of the photons used. By filtering out subpar photons, the team ensures that only the best ones take part in quantum information processing, which is essential for reliable calculations.

The quality of photonic qubits determines how well a quantum processor (QPU) can handle demanding workloads, such as computing molecular properties with the Variational Quantum Eigensolver (VQE), a hybrid quantum-classical algorithm used to estimate the ground-state energy of molecules such as lithium hydride (LiH). This refined approach to photon selection also reduces the number of physical photons needed to build a stable qubit, setting the stage for more economical and widely accessible photonic quantum computers. The technique is specific to light: other platforms, such as trapped ions, superconducting circuits, and neutral atoms, manipulate quantum states by different means and face their own sources of error.

Filtering without Knowing the Problem

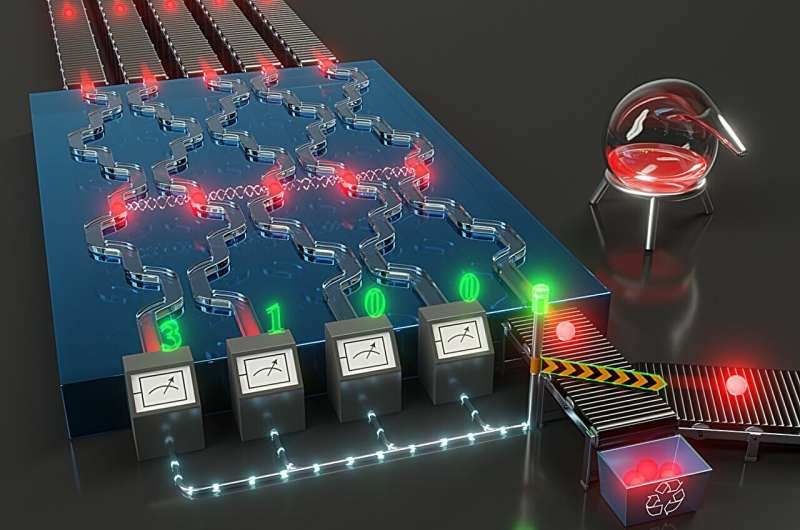

The new error mitigation technique is a way of obtaining high-quality photons. The researchers built an optical circuit from programmable light guides and detectors that sorts the desirable photons from a mixture containing many imperfect ones.

Quantum error correction often deals with errors after they occur, but this method acts beforehand. It uses the quantum properties of light to prepare a state reminiscent of Schrödinger’s cat, in which the photons are biased toward good properties rather than bad ones. A photon’s quality is settled only on measurement, and only photons with the “good” outcome are kept.

The process works like a sieve: several imperfect photons are consumed to obtain a single, higher-quality one. Discarding photons may seem wasteful, but better photons need less error correction later on, so the total number of photons required goes down and the computation becomes more resource-efficient and cost-effective.
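The cost side of this trade-off can be made concrete with a little arithmetic. The sketch below is a minimal illustration and not the Twente team’s circuit: it assumes a hypothetical filter that consumes a fixed number of photons per attempt and succeeds with a fixed probability. The function names and both numbers (3 photons per attempt, 25% success) are placeholders chosen for illustration, not values from the paper.

```python
import random


def expected_photons_per_output(photons_per_attempt, success_probability):
    """Average number of raw photons consumed per filtered photon kept.

    Attempts until the first success follow a geometric distribution with
    mean 1 / success_probability, and each attempt uses a fixed number of
    raw photons.
    """
    if not 0 < success_probability <= 1:
        raise ValueError("success_probability must be in (0, 1]")
    return photons_per_attempt / success_probability


def simulate_photons_per_output(photons_per_attempt, success_probability,
                                outputs=20_000, seed=0):
    """Monte Carlo check of the formula above."""
    rng = random.Random(seed)
    consumed = 0
    produced = 0
    while produced < outputs:
        consumed += photons_per_attempt      # every attempt costs photons
        if rng.random() < success_probability:
            produced += 1                    # the filter kept one photon
    return consumed / produced


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    n, p = 3, 0.25
    print("analytic :", expected_photons_per_output(n, p))
    print("simulated:", simulate_photons_per_output(n, p))
```

Whether this cost pays off depends on how much error-correction overhead the better photon saves later in the computation, which the sketch does not model.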

The technique’s novelty is that it needs no description of the defect. Filtering mechanisms typically require prior knowledge of the imperfection they target; this method can select good photons without knowing the nature of the error. That could make it a useful building block alongside quantum error-correcting codes such as surface codes, which are essential for constructing reliable quantum computers. Being able to filter without such advance knowledge departs from conventional error correction techniques and brings practical photonic quantum computing closer.

In Every Future Quantum Computer

The reliability of quantum computing depends heavily on the integrity of its most basic unit, the qubit. Classical computers work with binary bits, whereas quantum computers work with qubits, which can exist in a superposition of states. This quantum property lets the machines perform certain complex calculations at a pace classical computers cannot match. However, these calculations quickly become unreliable if they are not shielded from noise, which means that the first steps of a computation must be as clean as possible.

The researchers, who work in photonics and quantum technology, have designed a method that markedly reduces noise at the start of a quantum computation. At its core is the use of interference to distill photons of higher quality. For photonic quantum computers, the method helps to provide the logical qubits needed for reliable operation.

By using interference to steer photons more accurately, the University of Twente team contributes to the pursuit of scalable, universal quantum computing. The researchers stress that the technique is not merely useful but a necessary component of any practical photonic quantum device that aims for fault tolerance.

The work is particularly relevant to fields such as quantum chemistry, where results must reach chemical accuracy. Hybrid algorithms, which combine quantum and classical processing, could then solve problems too intricate for classical computers alone more efficiently.

Four researchers, led by Dr. Jelmer Renema, carried out the research. Their results point to a future in which such error-correcting techniques are built into every photonic quantum computer, helping accurate and efficient quantum computing become a reality.

Common Questions About Quantum Error Correction

How Quantum Error Correction Bolsters Quantum Computing Stability

Quantum error correction (QEC) is pivotal for the stability of quantum computing operations. It employs redundancy in encoding quantum information, which enables the identification and correction of errors in quantum bits (qubits). This process is similar to proofreading a text to ensure its accuracy, thus allowing quantum computations to run with fewer mistakes.
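The idea of redundancy can be illustrated with the simplest textbook example, a three-copy repetition code decoded by majority vote. The sketch below is a classical analogy only: it simulates bit flips on ordinary bits, whereas a real quantum code detects errors through syndrome measurements without reading out the data directly. The function names are illustrative and not taken from any library.

```python
import random


def encode(bit):
    """Encode one logical bit redundantly as three physical copies."""
    return [bit, bit, bit]


def apply_noise(codeword, p, rng):
    """Flip each physical bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in codeword]


def decode(codeword):
    """Majority vote: recovers the logical bit if at most one copy flipped."""
    return 1 if sum(codeword) >= 2 else 0


def logical_error_rate(p, trials=100_000, seed=0):
    """Estimate how often the decoded bit differs from the encoded one."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        if decode(apply_noise(encode(0), p, rng)) != 0:
            failures += 1
    return failures / trials


if __name__ == "__main__":
    p = 0.05
    print("physical error rate        :", p)
    print("simulated logical error    :", logical_error_rate(p))
    # Decoding fails when two or three copies flip: 3p^2(1-p) + p^3.
    print("analytic 3p^2 - 2p^3       :", 3 * p**2 - 2 * p**3)
```

For a small physical error rate, the encoded bit fails far less often than a single unprotected bit, at the price of tripling the number of bits.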

Challenges in Applying Quantum Error Correction

Implementing QEC in quantum computers faces several hurdles. Notably, QEC requires numerous additional qubits to encode information redundantly, and it involves complex gate operations that must be executed with extreme precision. The smallest of disturbances can result in errors, making the implementation a delicate process.

Influence of Quantum Error Correction Threshold on Accuracy

The QEC threshold is the physical error rate below which a quantum computer can correct more errors than are created during computations. Below the threshold, adding more error correction makes the encoded computation more accurate; above it, the correction process introduces more errors than it removes and accuracy declines. Staying beneath the threshold is therefore a precondition for performing long, complex computations reliably.
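Threshold behaviour can be seen in a toy model. The sketch below assumes a three-copy majority-vote code applied recursively (concatenation) against independent bit flips, for which the failure probability after one level is 3p^2 - 2p^3. In this toy model the threshold sits at p = 0.5; thresholds for realistic quantum codes and noise models are far lower, so the numbers here are illustrative only.

```python
def logical_rate(p):
    """Failure probability of a 3-copy majority vote with per-copy error p."""
    return 3 * p**2 - 2 * p**3


def concatenate(p, levels):
    """Apply the 3-copy encoding recursively; return the rate at each level."""
    rates = [p]
    for _ in range(levels):
        rates.append(logical_rate(rates[-1]))
    return rates


if __name__ == "__main__":
    # One rate well below, one just below, and one above the toy threshold.
    for p in (0.10, 0.45, 0.55):
        rates = concatenate(p, levels=5)
        print(p, " -> ", ["%.4f" % r for r in rates])
```

Starting below the threshold, each extra level of encoding pushes the error rate toward zero; starting above it, each level makes matters worse.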

Techniques to Minimize Quantum Error Correction Costs

There are strategies to decrease the resources needed for QEC. Researchers from Twente have developed a method that reduces the number of photons needed for this process, which lightens the load of error correction and can make quantum computers more feasible to build and operate.

Quantum Error Correction’s Role in Enhancing Quantum Sensing

QEC not only benefits computing but also improves the performance of quantum sensing devices. By correcting errors that can lead to signal degradation, quantum error correction enhances the sensitivity and reliability of measurements in quantum sensors, leading to more accurate results in various applications, from navigation to medical imaging.

Comparing the Efficiency of Quantum Error Correction Techniques

An array of quantum error correction algorithms exists, each with unique advantages and challenges. While some focus on correcting as many errors as possible, others aim to do so with minimal resource expenditure. The efficiency of these algorithms can be assessed by considering factors like the number of extra qubits required and the complexity of the error-correction process.
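One simple way to compare codes is by their standard parameters [[n, k, d]]: n physical qubits encode k logical qubits with distance d, and a code of distance d can correct up to (d - 1) // 2 arbitrary single-qubit errors. The sketch below tabulates a few well-known codes. It counts data qubits only and ignores the ancilla qubits and gate complexity needed to measure errors, so it is a rough first comparison rather than a full cost model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Code:
    name: str
    n: int  # physical data qubits
    k: int  # logical qubits encoded
    d: int  # code distance

    @property
    def extra_qubits(self):
        """Redundant qubits beyond the logical ones."""
        return self.n - self.k

    @property
    def correctable_errors(self):
        """Number of arbitrary single-qubit errors the code can correct."""
        return (self.d - 1) // 2


def rotated_surface_code(d):
    """Rotated surface code of distance d: d * d data qubits, one logical."""
    return Code(f"rotated surface code (d={d})", d * d, 1, d)


CODES = [
    Code("five-qubit code", 5, 1, 3),
    Code("Steane code", 7, 1, 3),
    Code("Shor code", 9, 1, 3),
    rotated_surface_code(3),
    rotated_surface_code(5),
]

if __name__ == "__main__":
    for code in sorted(CODES, key=lambda c: c.extra_qubits):
        print(f"{code.name:32s} extra qubits: {code.extra_qubits:3d}  "
              f"correctable errors: {code.correctable_errors}")
```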